Graphics Processing Unit. You may have heard your gaming nerd friend talk about how his (or her) PC is the ultimate gaming machine and drone on about the components that power it. And you think, “meh, why would I spend on that?” Granted. That is a fair reaction for the average user chilling on the couch, wanting to watch GoT on a desktop streaming app, print the odd plane ticket and edit a diet plan in Excel that will gather digital dust in a corner of the hard drive. Most of us are not supergamers.

I hope that is not your friend. Or you. (Courtesy South Park)

But, just for perspective on how computationally intensive our demands have become, check out this comparison between two versions of a popular game (Injustice by NetherRealm); notice the cape. (Courtesy ScereBro PSNU here):

Holy Scalable Vectors Batman! You’re so shiny and new!!

The amount of matrix math that the GPU does to render the images in the game referenced above is astounding. Yes, the same math with weird number-filled brackets you hated doing in school. I won’t bore you with the specifics, but every facet of the 3D Batman has coordinates in space (stored in matrices), and for every interaction the GPU calculates how the pixel values of those facets change. The resulting Batman render looks natural and smooth in various environments. Because... he’s Batman! But mostly because your laptop has RAM dedicated to the GPU for this.
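For the curious, here is a minimal sketch of what that matrix math looks like, written in plain Python. The `rotate_y` function and the `cape_facet` coordinates are made up for illustration; a real game engine runs this kind of multiplication on the GPU for millions of points per frame, not in a Python loop.

```python
import math

def rotate_y(vertices, angle_degrees):
    """Rotate 3D points around the vertical (y) axis using a 3x3 matrix."""
    a = math.radians(angle_degrees)
    rotation = [
        [math.cos(a), 0.0, math.sin(a)],
        [0.0, 1.0, 0.0],
        [-math.sin(a), 0.0, math.cos(a)],
    ]
    rotated = []
    for vertex in vertices:
        # Matrix-vector product: each new coordinate is one matrix row
        # multiplied element by element with the old point, then summed.
        rotated.append(tuple(
            sum(row[i] * vertex[i] for i in range(3)) for row in rotation
        ))
    return rotated

# Three hypothetical corner points of one triangular facet of a cape.
cape_facet = [(1.0, 0.0, 0.0), (0.0, 2.0, 0.0), (0.0, 0.0, 1.0)]

# Turn the facet a quarter of the way around the vertical axis.
turned = rotate_y(cape_facet, 90)
for before, after in zip(cape_facet, turned):
    print(before, "->", tuple(round(c, 3) for c in after))
```

Every point goes through the same small calculation, independently of the others, which is exactly the kind of repetitive work a GPU is built for.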

But what if I’m not a gamer, you ask. Reasonable question. A lot has changed since the 2000s and early 2010s. We have come far in democratizing high performance computing (HPC), machine learning and AI (don’t even get me started on how people confuse those), virtual servers and the like. The architecture behind those technologies relies on what is called parallel processing. It’s just what it sounds like: splitting jobs between different cores on a computer, or between different computers, to get the work done faster. This was exactly the premise presented by gaming processor companies like Nvidia and ATI. It was for gaming then, but if you check out Nvidia’s website today, they do as much work with AI!
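If you want to see the "splitting jobs" idea in code, here is a small sketch using Python's standard `concurrent.futures` module. The function names and the choice of four workers are assumptions for illustration. Threads are used only to keep the example short; in standard Python they will not actually speed up number crunching, and real workloads would use separate processes or a GPU library instead.

```python
from concurrent.futures import ThreadPoolExecutor

def sum_of_squares(chunk):
    """The small job each worker does on its own slice of the data."""
    return sum(n * n for n in chunk)

def parallel_sum_of_squares(numbers, workers=4):
    """Split one big job into chunks, hand them to workers, combine results."""
    # Ceiling division so every number lands in exactly one chunk.
    size = max(1, (len(numbers) + workers - 1) // workers)
    chunks = [numbers[i:i + size] for i in range(0, len(numbers), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    # The final answer is just the partial answers added together.
    return sum(partial_sums)

numbers = list(range(1, 1001))
total = parallel_sum_of_squares(numbers)
print(total)
```

The pattern is the important part: chop the work up, let each worker handle its piece without waiting on the others, then merge the results.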

That’s right, matrix math, copious amounts of it. It’s the same thing your computer will have to do as you demand smarter analytics for your business from the gigantic tsunami of data generated by your activities and customers. Finding out which customer bought how many lube change services won’t be enough. You will want to correlate it with the make, model and year of the vehicle they drive, which season and time of day they usually enter your shop, how soon they leave and what else they pick up at the counter. This is the edge that will help you win new customers and retain current ones by making the right investments. All this will be powered by huge databases that may reside on a low-cost cloud server. But sometimes you may need on-premise analytics (for privacy, convenience or legal reasons), and this is where the GPU really helps. GPUs are not cheap, and you can’t get a great GPU-enabled computer for $500. The good news is that as more companies get into analytics, more people analyze their own data and analytical tools are democratized, laptops should get cheaper.

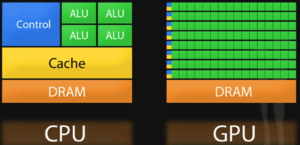

This article explains GPU performance vis-à-vis CPUs really well, but if you want to skip to the fun part, the super entertaining MythBusters explain it better than I ever could, in just one video. Basically, a CPU spends more of its resources on caching and controlling the data (more managing), whereas the GPU spends them on just doing. Nvidia’s own page on it, albeit with a marketing tinge, explains why we need them. (Courtesy NYU-CDS, NVIDIA and MythBusters).

Who knew gaming tech would one day be used to create graphs for lube change shops?

And don’t forget to recycle your PC with us!